AI Bias in Body Type: The Hidden Fashion Challenge

TL;DR

AI bias in body type recommendations is reshaping how fashion tech perceives beauty. This article explores its roots, real-world impact, and how platforms like Glance build more inclusive and confidence-driven AI personalization models.

When Algorithms Learn Our Bodies

AI in fashion is no longer a distant dream—it’s woven into how we discover, visualize, and buy outfits. But as personalization deepens, so does an important question: What happens when artificial intelligence learns our bodies—but not our diversity?

As AI body type recognition spreads, so does its potential for bias, raising new ethical challenges in personalization. While body type recognition helps users find better fits, it can unintentionally reinforce narrow ideals of attractiveness, especially for users with diverse or non-Western body types.

A March 2024 arXiv paper titled “Bias in Generative AI” presented evidence that generative image models exhibit bias against women and African Americans.

This isn’t just a tech issue—it’s an inclusivity issue.

The Anatomy of AI Bias: How It Forms

Bias in fashion AI starts where the models start: with their training datasets.

1. Training Data Gaps

AI learns body proportions, shapes, and silhouettes from existing visual datasets. However, many widely used public datasets, such as ImageNet or DeepFashion, skew toward Western-centric body types, leaving gaps for diverse Indian, African, or Southeast Asian silhouettes.

A review on AI and the “representation gap” in fashion notes that many fashion AI systems are trained on datasets lacking diversity in body shapes and skin tones.
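One way to make the “representation gap” concrete is to count how each group appears in a dataset and compare that with a target share. The sketch below assumes each image already carries a group tag; the tag names (`western`, `south_asian`, `african`), the equal target shares, and the toy counts are hypothetical illustrations, not figures from any real dataset.

```python
from collections import Counter


def representation_gap(labels, target_shares):
    """Compare each group's share of a dataset against a target share.

    labels: one group tag per image (hypothetical tags for illustration).
    target_shares: desired share per group; values should sum to 1.
    Returns {group: observed_share - target_share}; negative = under-represented.
    """
    counts = Counter(labels)
    total = len(labels)
    return {
        group: counts.get(group, 0) / total - target
        for group, target in target_shares.items()
    }


# Toy dataset: 80 images tagged "western", 15 "south_asian", 5 "african".
labels = ["western"] * 80 + ["south_asian"] * 15 + ["african"] * 5
targets = {"western": 1 / 3, "south_asian": 1 / 3, "african": 1 / 3}

gaps = representation_gap(labels, targets)
# Print the most under-represented groups first.
for group, gap in sorted(gaps.items(), key=lambda kv: kv[1]):
    print(f"{group:12s} {gap:+.2f}")
```

Which groups to track and what the targets should be are product decisions; the code only makes the gap measurable once those choices are made.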

2. Algorithmic Generalization

Even when inclusive data exists, AI models tend to generalize toward “average” fits that score well across a global dataset as a whole. The problem? “Average” doesn’t mean accurate: a model can look strong in aggregate while performing poorly for smaller groups. Glance’s approach, by contrast, rejects this one-size-fits-all assumption by training its recommendation systems on regional user feedback loops and contextual learning patterns.
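The gap between “average” and “accurate” is easy to reproduce with toy numbers: when one segment dominates the data, overall accuracy mostly reflects that segment. This minimal sketch uses made-up fit outcomes for two hypothetical segments (`segment_a`, `segment_b`) and reports accuracy both overall and per segment.

```python
def accuracy(pairs):
    """Fraction of (predicted_size, kept_size) pairs that match."""
    return sum(pred == kept for pred, kept in pairs) / len(pairs)


# Toy fit outcomes: (size the model predicted, size the user actually kept),
# grouped by hypothetical segment. segment_a dominates the data.
results = {
    "segment_a": [("M", "M")] * 90 + [("M", "L")] * 10,  # 100 users, 90% correct
    "segment_b": [("M", "M")] * 4 + [("M", "L")] * 6,    # 10 users, 40% correct
}

all_pairs = [pair for pairs in results.values() for pair in pairs]
overall = accuracy(all_pairs)
per_group = {group: accuracy(pairs) for group, pairs in results.items()}

print(f"overall: {overall:.2f}")
for group, acc in per_group.items():
    print(f"{group}: {acc:.2f}")
# How far the worst-served segment falls below the headline number.
print(f"worst-group gap: {overall - min(per_group.values()):.2f}")
```

Reporting the worst-group figure alongside the overall one is a common auditing habit, because it surfaces exactly the users an aggregate score hides.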

3. Societal Reinforcement

AI bias doesn’t emerge in isolation—it mirrors human bias. Beauty filters, influencer trends, and fashion imagery online continue to shape what AI models learn to prioritize.

Behavior Meets Bias: How AI Interprets Body Type

In fashion personalization, body recognition often extends beyond simple measurements. AI systems analyze cues from posture, outfit choices, and engagement patterns. For instance, fast scrolling or hovering over certain styles informs algorithms about user preferences.

Yet, these subtle behavioral cues can amplify bias if interpreted incorrectly. For example:

- Longer dwell time on a certain outfit might signal admiration, or merely curiosity about a trend the user doesn’t fit into.

- Frequent avoidance of body-hugging clothes could be read as disinterest, when it often reflects a comfort-driven preference.

That’s why ethical fashion AI needs interpretive nuance, not just pattern detection.
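To illustrate the difference between pattern detection and interpretation, the sketch below contrasts a rule that reads long dwell time as a “like” with one that waits for a corroborating action. The event fields, the 5-second threshold, and the label names are hypothetical assumptions for illustration, not a description of any platform’s actual signals.

```python
from dataclasses import dataclass


@dataclass
class Interaction:
    # Hypothetical event fields; real platforms log different signals.
    dwell_seconds: float
    saved: bool
    opened_size_chart: bool
    dismissed: bool


def naive_label(event: Interaction) -> str:
    """Pattern detection only: long dwell is read as a like."""
    return "like" if event.dwell_seconds >= 5 else "no_signal"


def contextual_label(event: Interaction) -> str:
    """Require a corroborating action before treating dwell time as preference."""
    if event.dismissed:
        return "not_for_me"
    if event.saved or event.opened_size_chart:
        return "like"
    if event.dwell_seconds >= 5:
        # Could be admiration, curiosity, or doubt about fit: don't assume.
        return "uncertain"
    return "no_signal"


# A user lingers on an outfit, then dismisses it.
browse = Interaction(dwell_seconds=8, saved=False, opened_size_chart=False, dismissed=True)
print(naive_label(browse), contextual_label(browse))
```

The naive rule records this as a like; the contextual rule records the dismissal instead, and leaves ambiguous dwell time labeled as uncertain rather than guessing.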

Case in Point: AI Bias in Real-Life Fashion Tools

Several studies highlight this ongoing concern:

- Bias in AI Fashion Recommendations: A review on AI and the "representation gap" in fashion notes that many fashion AI systems are trained on datasets lacking diversity in body shapes and skin tones.

- Challenges in Size Inclusivity: A recent Vogue Business survey of 687 fashion consumers in the US and UK reveals that inconsistent sizing and poor fit are major deterrents to purchasing fashion items—nearly as impactful as cost and quality.

- Consumer Preferences for Body Representation: Consumers strongly want adjustable and inclusive designs to accommodate body fluctuations, expressing willingness to pay more for better fit and higher-quality materials.

Together, these findings show how poor fit and narrow representation risk leaving millions of users feeling unseen or unrepresented.

Ethical Fashion Tech: What Glance Does Differently

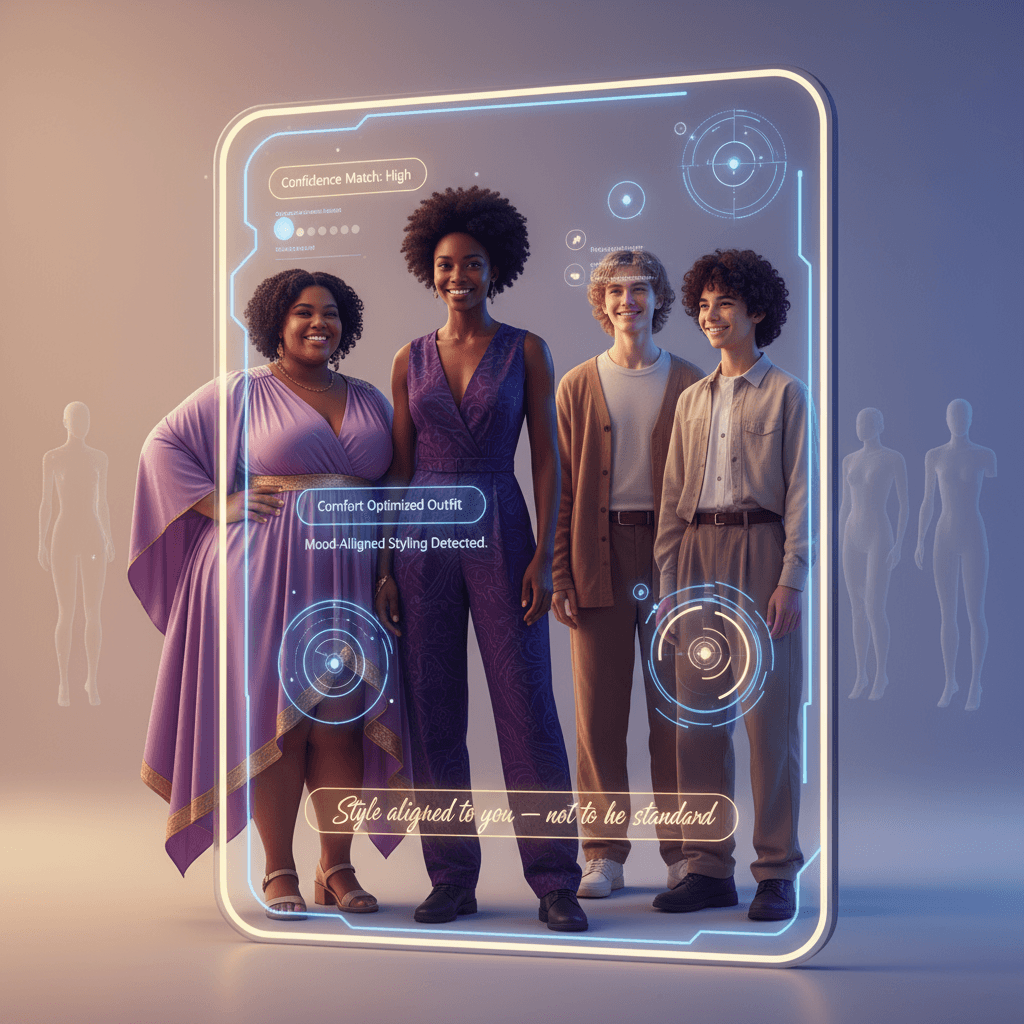

Glance’s personalization system is designed around inclusivity-first intelligence. Instead of static sizing or “fit prediction,” Glance uses behavioral understanding through its AI Twin—a dynamic, learning model that reflects the user’s evolution, not a stereotype.

It interprets subtle engagement signals like:

- Scroll speed (to gauge scanning vs. interest)

- Timing patterns (to align recommendations with user energy cycles)

- Visual attention (to assess emotional resonance of styles)

Unlike conventional models, Glance doesn’t categorize users into rigid “body type labels.” Instead, it learns the evolving relationship between comfort, context, and confidence, making every recommendation progressively adaptive.
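Scoring each style on a continuous scale, rather than assigning the user one fixed label, is one simple way to keep a profile adaptive. This sketch is not Glance’s AI Twin; it assumes a hypothetical scroll-speed threshold for separating scanning from interest and uses an exponential moving average so that each new interaction nudges, but does not overwrite, a style’s score.

```python
def engagement_weight(scroll_px_per_sec: float, scan_threshold: float = 1500.0) -> float:
    """Map scroll speed to a 0..1 interest weight; fast scrolling reads as scanning.

    The threshold is a hypothetical placeholder, not a measured value.
    """
    return max(0.0, 1.0 - scroll_px_per_sec / scan_threshold)


def update_affinity(affinity: dict, style: str, weight: float, rate: float = 0.2) -> None:
    """Move one style's score toward the observed interest (exponential moving average).

    Styles are scored independently, so the user is never collapsed into a single label.
    """
    old = affinity.get(style, 0.5)  # unseen styles start at a neutral 0.5
    affinity[style] = (1 - rate) * old + rate * weight


affinity: dict = {}
# (style, scroll speed in px/s) for a short, hypothetical browsing session.
session = [("wrap_dress", 200), ("bodycon", 1400), ("wrap_dress", 300), ("kurta_set", 100)]
for style, speed in session:
    update_affinity(affinity, style, engagement_weight(speed))

for style, score in sorted(affinity.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{style}: {score:.2f}")
```

The `rate` parameter controls how quickly the profile follows recent behavior: higher values adapt faster but react more to a single session.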

Inclusive Design: Beyond Size to Confidence

AI bias doesn’t just affect visual representation—it influences how people feel. Especially among younger users, biased recommendations can affect self-esteem and body perception.

A UNICEF industry toolkit on advancing diversity, equity, and inclusion notes that unrealistic beauty norms in online gaming can distort children's perceptions of self, leading to confidence issues, mental health problems, and self-harm.

Glance approaches this issue by focusing on “fit confidence,” not “fit conformity.” It promotes styling that aligns with the user’s mood, comfort, and professional setting rather than idealized templates, helping strengthen body confidence for teens and adults alike.

Visual Bias Audit: Measuring and Correcting AI Errors

Fashion brands and AI developers are increasingly turning to bias audits—structured reviews of datasets and algorithmic behavior—to detect disparities.

| Bias Type | Common Source | Mitigation Method |
|---|---|---|
| Shape Bias | Unbalanced datasets | Synthetic dataset balancing |
| Skin Tone Bias | Poor lighting calibration | Contrast-normalized inputs |
| Regional Fit Bias | Over-representation of Western samples | Localized data collection |
| Engagement Bias | Misread scroll behavior | Behavior-context labeling |
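The “synthetic dataset balancing” mitigation covers a family of techniques. As a simple stand-in, the sketch below uses random oversampling: it duplicates existing examples from smaller groups until every group matches the largest one. Synthetic methods go further by generating new examples instead of repeating old ones. The body-shape tags and record counts are hypothetical.

```python
import random
from collections import defaultdict


def oversample_to_balance(samples, group_of, seed=0):
    """Randomly duplicate samples from smaller groups until every group
    matches the size of the largest one (random oversampling)."""
    rng = random.Random(seed)  # fixed seed for reproducible output
    by_group = defaultdict(list)
    for sample in samples:
        by_group[group_of(sample)].append(sample)

    target = max(len(items) for items in by_group.values())
    balanced = []
    for items in by_group.values():
        balanced.extend(items)  # keep every original sample
        balanced.extend(rng.choices(items, k=target - len(items)))  # top up with duplicates
    return balanced


# Hypothetical records: (image_id, body_shape_tag)
data = (
    [(f"img{i}", "hourglass") for i in range(60)]
    + [(f"img{i}", "pear") for i in range(60, 75)]
    + [(f"img{i}", "apple") for i in range(75, 80)]
)
balanced = oversample_to_balance(data, group_of=lambda rec: rec[1])
```

Duplicated examples add no new information about under-represented bodies, so oversampling mainly rebalances what the model pays attention to; collecting more diverse data remains the stronger fix.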

Personalization is spreading well beyond fashion: according to a Gartner report, by 2028 over 20% of digital workplace applications are projected to incorporate AI-driven personalization algorithms, aiming to create adaptive experiences that enhance worker productivity.

As personalization becomes this pervasive, the shift from “black-box” AI to transparent and explainable AI (XAI) is essential, especially for fashion ecosystems that influence identity.
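For a simple linear scoring model, an explanation can be exact: each feature’s weight times its value is that feature’s contribution to the score, and those contributions can be shown to the user as reasons. The feature names and weights below are hypothetical, and more complex models need dedicated XAI methods (such as SHAP or LIME) to approximate the same kind of breakdown.

```python
# Hypothetical feature weights for a linear recommendation score.
WEIGHTS = {"matches_saved_styles": 1.5, "fit_feedback_positive": 2.0, "trending": 0.5}


def score_with_reasons(features: dict) -> tuple[float, list[str]]:
    """Score an item and list each feature's contribution, largest first.

    For a linear model, weight * value is exactly that feature's share of the score.
    """
    contributions = {name: WEIGHTS[name] * features.get(name, 0.0) for name in WEIGHTS}
    score = sum(contributions.values())
    reasons = [
        f"{name}: {value:+.2f}"
        for name, value in sorted(contributions.items(), key=lambda kv: kv[1], reverse=True)
        if value != 0  # hide features that played no part
    ]
    return score, reasons


score, reasons = score_with_reasons(
    {"matches_saved_styles": 1.0, "fit_feedback_positive": 0.5, "trending": 1.0}
)
print(score)
for reason in reasons:
    print(reason)
```

Because the contributions sum to the score, the user can see that saved-style similarity, not trendiness, drove this recommendation.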

The Indian Body Type Challenge

Indian consumers are often misrepresented in global AI fashion models because of data localization gaps: relatively little training data is collected from Indian users. Factors like regional genetics, fabric comfort, and climate-based preferences alter how clothes fit and fall.

As the Indian market leads the world in mobile-first shopping, localized fashion intelligence—like Glance’s behavioral adaptation model—is crucial for bridging this body-data gap.

Changing Body Shape and Adaptive AI

Human bodies are dynamic. From growth and aging to fitness shifts, changing body shape is natural. An AI model that never updates what it knows about a user falls out of step as that user’s body changes.

Glance’s self-learning AI ensures recommendations evolve with life stages, adjusting proportions, styles, and even color preferences over time. This human-centered AI personalization turns body change into fashion fluidity, not friction.
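One way a model can evolve with life stages is to weight recent observations more heavily than old ones. The sketch below assumes a hypothetical history of dated waist measurements and applies exponential decay with a 90-day half-life (an arbitrary choice), so the estimate tracks the user’s current body rather than an average of every past one. It is an illustration of recency weighting, not Glance’s method.

```python
import math


def recency_weighted_estimate(observations, half_life_days=90.0):
    """Estimate a current measurement from dated observations.

    Each observation's influence halves every `half_life_days` (exponential decay).
    observations: list of (days_ago, value) pairs.
    """
    decay = math.log(2) / half_life_days
    weights = [math.exp(-decay * days_ago) for days_ago, _ in observations]
    total = sum(weights)
    return sum(w * value for w, (_, value) in zip(weights, observations)) / total


# Hypothetical waist measurements (cm) logged over about two years: (days_ago, value).
waist_history = [(720, 76.0), (360, 80.0), (90, 84.0), (10, 85.0)]

plain_average = sum(v for _, v in waist_history) / len(waist_history)
print(f"plain average:    {plain_average:.1f} cm")
print(f"recency-weighted: {recency_weighted_estimate(waist_history):.1f} cm")
```

The plain average is pulled down by measurements from two years ago, while the recency-weighted estimate stays close to the latest readings; a shorter half-life adapts faster but is noisier.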

5 Key Takeaways for Ethical Fashion AI

- Bias begins with data—diversify image and behavioral datasets across demographics.

- Explainability matters—users should understand why AI made a recommendation.

- Inclusivity by design—create experiences that represent all shapes and tones.

- Continuous auditing—bias detection must be iterative, not one-time.

- Empathy-driven personalization—build AI systems that learn with the user, not about them.

Conclusion

AI-driven fashion and outfit personalization hold tremendous promise, but only if they include all body types. When bias sits at the heart of recommendation systems, it doesn’t just produce ill-fitting suggestions; it can misshape self-image. Ethical AI in body-type fit is about more than algorithms: it’s about trust, representation, and body confidence. Platforms must ask: are we building tools that evolve with every body, not just model ones?

For more on how to dress for your body type with AI assistance, check out our guide: How to Dress for Your Body Type with Glance AI

FAQs

Q1: What is “AI bias” in body-type recommendations?

It’s when recommendation systems favour certain body types or silhouettes because their training data or logic is skewed, resulting in less accurate or inclusive suggestions.

Q2: Why is this especially important for Indian body types?

Indian body types may differ from the Western-centric bodies that dominate many training datasets in height, waist-hip ratio, torso length, and patterns of body-shape change. If AI models don’t account for these differences, their recommendations become less relevant and less effective.

Q3: Can outfit personalization be fair for all body types?

Yes — with inclusive datasets, transparent algorithms, and feedback systems, outfit personalization can serve a wide spectrum of body types equitably.

Q4: What should styling platforms do to reduce bias?

They must train models on diverse body data, make recommendation logic visible, adapt with user feedback and consider cultural/region-specific body metrics.

Q5: How does AI bias affect body confidence for teens?

Teens are especially sensitive to fit and representation. If AI suggests styles that assume a narrow “ideal” body shape, teens may feel excluded, reducing body confidence and engagement.